One of the ways that investors hurt themselves is by chasing performance. Unfortunately, many financial services organizations enable this practice by focusing on short-term performance.

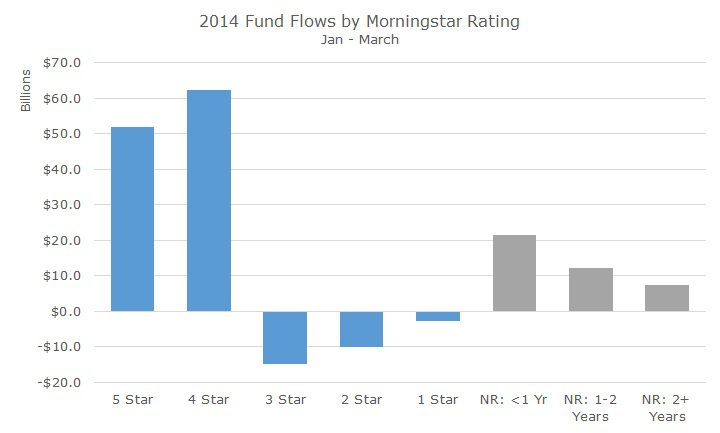

Recently, I saw some data showing flows into mutual funds and exchange-traded funds (ETFs), broken out by Morningstar star rating.

The chart is self-explanatory except for the columns labeled NR, which means ‘Not Rated.’ To earn a Morningstar star rating, a fund needs at least three years of performance. I’m not sure why they broke out the NR funds by year, but the breakdown is interesting because it presumably shows that investors are drawn to hot new products.

We’ve written about how the star system works before, and if you want to refresh your memory, Morningstar publishes a detailed explanation of its methodology.

The quick explanation is that funds are ranked within their category (large-cap growth, corporate bonds, etc.) on their risk/return numbers and then stuffed into a distribution so that the best 10 percent of funds are awarded 5 stars and the worst 10 percent are bestowed the kiss-of-death 1-star rating.
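For readers who like to see the mechanics, here is a rough Python sketch of that forced distribution. It assumes each fund in a category already has a single risk-adjusted score (a hypothetical input; Morningstar's actual methodology is more involved) and uses the bell-curve split Morningstar describes: top 10 percent 5 stars, next 22.5 percent 4 stars, middle 35 percent 3 stars, next 22.5 percent 2 stars, bottom 10 percent 1 star. The function name `assign_stars` is made up for illustration; this is not Morningstar's code.

```python
from typing import Dict

# Assumed cumulative cutoffs (best funds first) and the star each bucket gets:
# top 10% -> 5, next 22.5% -> 4, middle 35% -> 3, next 22.5% -> 2, bottom 10% -> 1.
CUTOFFS = [(0.10, 5), (0.325, 4), (0.675, 3), (0.90, 2), (1.00, 1)]


def assign_stars(scores: Dict[str, float]) -> Dict[str, int]:
    """Rank the funds of ONE category by a hypothetical risk-adjusted score
    (higher is better) and map each fund's rank onto the fixed star buckets."""
    ranked = sorted(scores, key=scores.get, reverse=True)  # best score first
    n = len(ranked)
    stars: Dict[str, int] = {}
    for i, fund in enumerate(ranked):
        share = (i + 1) / n  # fraction of the category ranked at or above this fund
        for cutoff, star in CUTOFFS:
            if share <= cutoff + 1e-9:  # small tolerance for float rounding
                stars[fund] = star
                break
    return stars


if __name__ == "__main__":
    # A made-up category of 20 funds with scores 1 through 20.
    demo = {f"Fund {k:02d}": float(k) for k in range(1, 21)}
    result = assign_stars(demo)
    for star in (5, 4, 3, 2, 1):
        print(star, "star funds:", list(result.values()).count(star))
```

With those 20 made-up funds, the sketch hands out 5, 4, 3, 2 and 1 stars to 2, 4, 7, 5 and 2 funds respectively. The point it illustrates is that the ratings are relative: some funds in every category get 5 stars and some get 1 star, no matter how the category as a whole performed.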

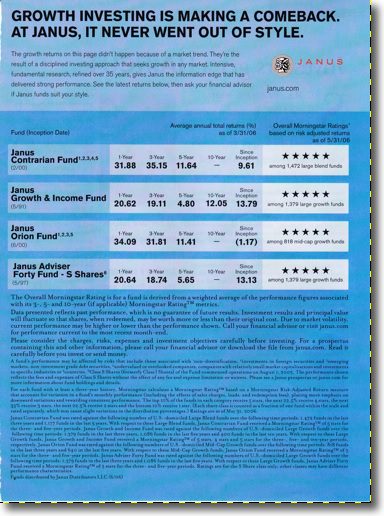

Part of the reason investors appear to pay attention to the ratings is that fund companies are happy to promote them when they look good.

Consider this advertisement from Janus. I’m not picking on Janus; it just happened to be one of the top results on a Google Image search for ‘mutual fund advertisement.’

The image isn’t great, but you can certainly see the highlight of the ad: that these four funds earned five stars.

I’m not familiar with the Janus lineup of funds, but I’m betting they had more than four funds when they ran this ad, and I assume the bottom half of the page, dedicated to mouse-type disclosures, explains how they did their cherry-picking.

Vanguard, one of my favorite fund companies because they are so darn responsible, did a study of how funds performed after receiving each star rating. It’s almost comical: the 5-star funds had the worst subsequent performance, results improved with each step down in rating, and the 1-star funds had the best performance of all.

I’m not suggesting that we go out and buy 1-star funds. I actually don’t care what star rating the funds we use have, and we don’t look at the stars at all. I’m perfectly happy not to have 5- or 1-star funds, because those are usually the ones that swing the most relative to their benchmarks.

It appears that we are in the minority, though, based on the fund flow data at the top of this post. Maybe someday people will take a more disciplined approach, but I suspect the problem is rooted in human nature and unlikely to change anytime soon.